Not sure what caused it, would be glad to hear others’ experiences about this. did not work on the actual pages I wanted to scrape.

If you try anyway, you’ll be welcomed by a puzzling #VALUE error. That’d seem like a lot, but the web pages I wanted to store in them were more around 77k characters.

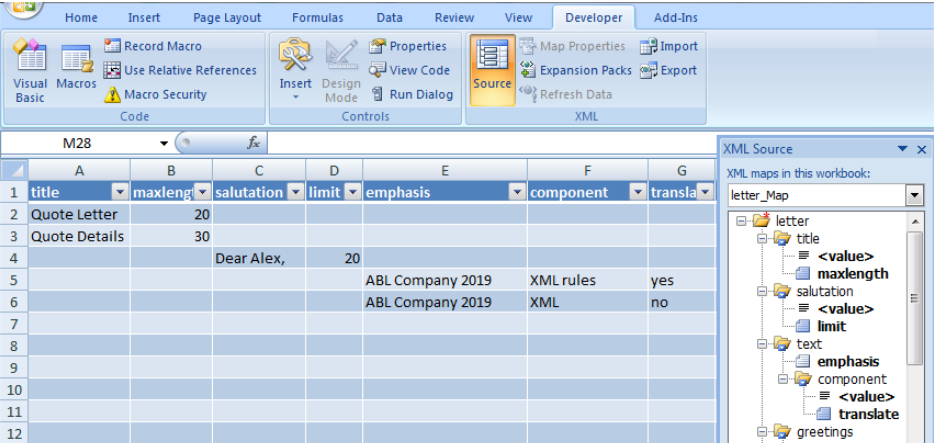

(Note: neither GDocs nor Excel support scraping from websites requiring authentication, for which you’re better off with Ruby gem Watir.) As such, I turned towards Excel instead.Ĭhallenges in Excel included the following:

Worse yet, you can only use such functions 50 times per sheet at the time of writing, while there is no native way to import a web page in one cell to parse its contents from other cells either. Unfortunately, both GDocs’ ImportXML and Excel’s Webservice and FilterXML functions have their flaws. GDocs’ ImportXML doesn’t fully support XPath (1.0) - it doesn’t allow you to pick out a second div using /div, instead returning a block of anything matching what you asked for, making it hard to get only the parts you wanted exactly where you wanted them. Because getting the data where you want it (in your spreadsheet) is half the work. As a spreadsheet geek, I was thrilled to find out about the new functionality in Excel 2013, most notably its new webservice and filterXML functions, which seemed meant to put it back on par with Google Docs’ muc h-acc lai med ImportXML function.
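To make the "second div" point concrete, here is a minimal sketch of what an XPath positional predicate does. It uses Python's standard-library `xml.etree.ElementTree`, which supports only a limited XPath subset, rather than ImportXML or FILTERXML themselves. The page markup and the variable names are hypothetical stand-ins for a scraped page.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed stand-in for a scraped page.
PAGE = """
<body>
  <div>First block</div>
  <div>Second block</div>
  <div>Third block</div>
</body>
""".strip()

root = ET.fromstring(PAGE)

# "./div" matches every div child: you get the whole block of matches.
all_divs = [d.text for d in root.findall("./div")]

# "./div[2]" adds a positional predicate (1-based), selecting only the second div.
second_div = root.find("./div[2]").text

print(all_divs)    # ['First block', 'Second block', 'Third block']
print(second_div)  # Second block
```

The difference lies in the `[2]` predicate alone: without it you get every match, and with it you get the one element you wanted in the place you wanted it.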
